Meta Tightens Safeguards for Teens Across Instagram, Facebook, and Messenger

Adshine.pro | 09/26/2025

Meta has confirmed that it is expanding its protections for teen accounts across Instagram and Facebook to every young user worldwide, a move designed to bolster safeguards for minors while offering parents greater reassurance.

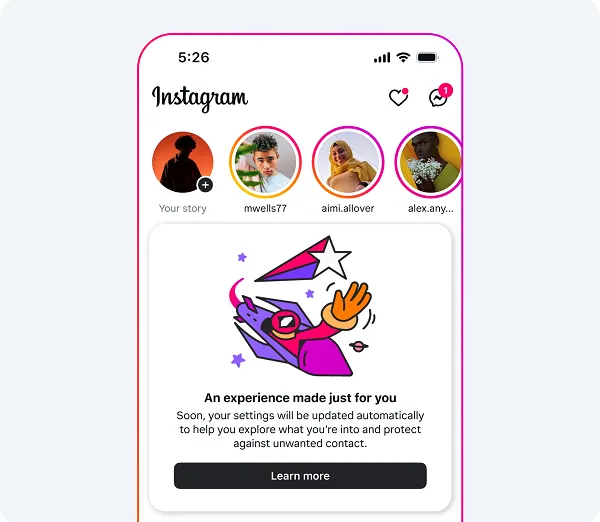

The system, first launched in the U.S. last year, automatically limits interactions between teen users and certain accounts, restricts what content under-18s can view, and introduces alerts about time spent on the apps.

Now, Meta is rolling out these measures globally as part of its broader effort to strengthen protections within its platforms.

As the company explained:

“A year ago, we introduced Teen Accounts — a significant step to help keep teens safe across our apps. As of today, we’ve placed hundreds of millions of teens in Teen Accounts across Instagram, Facebook, and Messenger. Teen Accounts are already rolled out globally on Instagram and are further expanding to teens everywhere around the world on Facebook and Messenger today.”

In practice, this means every teen user on Meta’s major platforms will now be covered by the new restrictions and protections.

The obvious challenge is that young people can misreport their age to avoid such safeguards. Meta says it is addressing this through improved age-detection technology, drawing on factors such as who follows you, who you follow, the type of content you engage with, and more.

These systems, powered by Meta’s developing AI models, have made it harder for teens to bypass controls, creating a more robust safety net. The push is not only about protecting younger users but also about meeting increasing regulatory pressure. Several countries, including France, Greece and Denmark, are advocating for EU-wide restrictions, while Spain is considering limiting access to those over 16. Australia, New Zealand, and Norway are also preparing their own rules.

It seems only a matter of time before stricter controls on teen social media use become widespread. By enhancing its safeguards now, Meta is positioning itself ahead of these legislative shifts, potentially insulating itself from harsher measures.

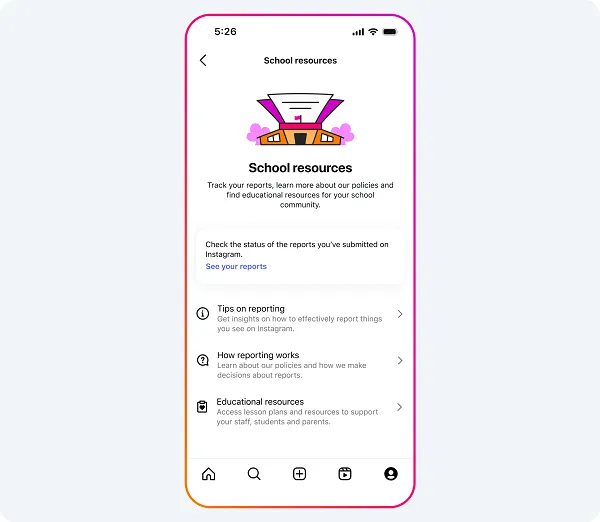

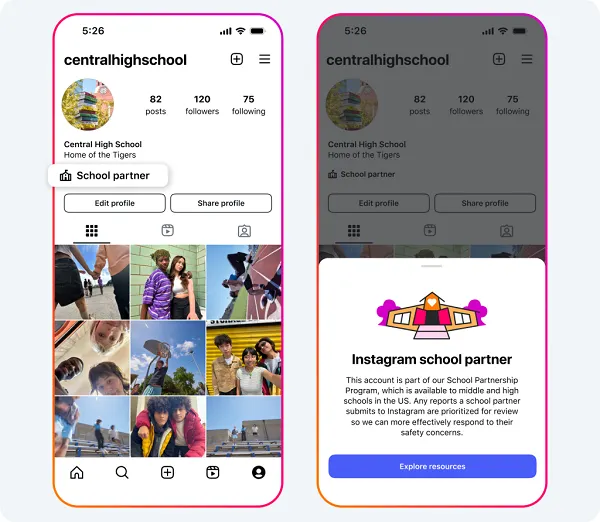

Beyond platform-level protections, Meta is introducing a new School Partnership Program for all U.S. middle and high schools, allowing educators to flag safety concerns directly to the company for expedited review.

“This means that schools can report Instagram content or accounts that may violate our Community Standards for prioritized review, which we aim to complete within 48 hours. We piloted this program over the past year and opened up a waitlist for schools to join in April. The program has helped us quickly respond to educators’ online safety concerns, and we’ve heard positive feedback from participating schools.”

Schools that participate will also be able to display a banner on their Instagram profiles, signaling to parents and students that they are official partners in online safety.

In addition, Meta has teamed up with Childhelp to develop an online safety curriculum tailored for middle school students, with the goal of reaching one million young learners by next year.

Taken together, these initiatives reflect Meta’s bid to better protect teens, while also promoting digital literacy and reducing risks within its apps.

Whether this will be enough to stave off looming regulatory action is uncertain. But at the very least, Meta is aligning itself more closely with public and political expectations — and giving itself a stronger position in the debates that lie ahead.
