Meta Steps Up Teen Safety with New Age Checks

Adshine.pro · 09/23/2025 · 4 views

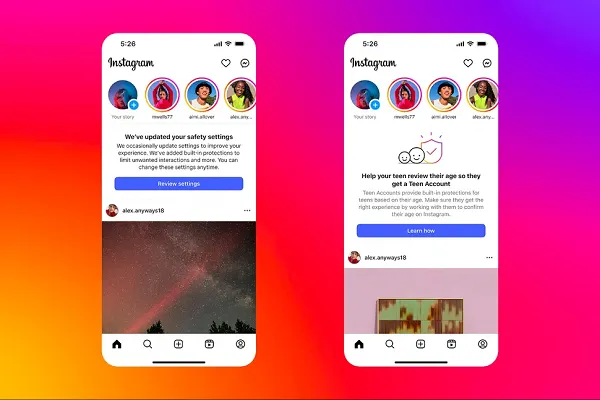

Instagram is stepping up its teen protection measures, with enhanced AI-driven age detection and the expansion of its teen account protections to users in Canada.

According to a report from Android Central, Instagram’s upgraded detection system will now automatically restrict certain interactions if it determines that an account holder is under 18—even in cases where the user has attempted to bypass safeguards by entering an adult birth date.

This marks the latest evolution in Meta’s age-detection technology, which draws on a range of signals to identify user age. These include who follows and engages with the account, the type of content a user interacts with, and even contextual clues such as birthday messages from friends.

Meta concedes the system is not flawless, and mistakes will occur. Users flagged incorrectly will have the option to appeal or update their age details. Still, the company insists its detection methods are improving and becoming more adept at identifying underage usage patterns.

As part of this push, Meta will now default Canadian teens under 16 into its advanced security mode—mirroring protections already in place for U.S. teens since last September. This setting can only be switched off by a parent.

The move comes amid growing global scrutiny of teen safety on social platforms. Over the past year, several European nations, including France, Greece, and Denmark, have voiced support for laws that would bar younger teens from accessing social media entirely. Spain is weighing a minimum age of 16, while Australia and New Zealand are preparing their own restrictions, and Norway is in the process of drafting new rules.

The trajectory seems clear: restrictions on teen social media access are likely to become more widespread. The real challenge, however, lies in enforcement—and in establishing legal accountability for platforms to uphold these rules.

At present, each platform relies on its own detection mechanisms, and while Instagram’s AI system appears to be among the more advanced, there is no universal industry benchmark for accuracy or compliance. Regulators are still exploring alternatives, such as third-party selfie verification, though such approaches raise their own privacy concerns.

In Australia, for example, regulators recently tested 60 different age-verification systems from multiple providers. The results showed that while several were relatively effective, none were error-free—especially when users were within two years of the 16-year-old threshold.

So far, Australia’s legislation simply requires platforms to take “all reasonable steps” to remove under-16 accounts, without setting a binding accuracy standard. Without a universally recognized system, enforcement remains murky, which could undermine the effectiveness of such laws.

This is why Meta’s work in refining AI-based verification may prove pivotal. If its methods continue to improve and demonstrate reliable results, they could form the basis of an industry-wide standard for teen protection online.

For now, Instagram’s expansion of these measures to Canada represents another incremental but important step toward a safer digital environment for young users.
