Meta Claims Victory as Community Notes Show Tangible Impact on Misinformation

Adshine.pro · 05/30/2025

Meta has reported that recent changes to its moderation and enforcement policies, including the integration of Community Notes, are showing promising results. According to its latest transparency reports, enforcement errors in the United States have been reduced by 50%.

This reduction aligns with one of Meta’s core goals in shifting moderation strategies: allowing more speech on its platforms and reducing unnecessary restrictions caused by internal rule enforcement. Community Notes play a key role in this by giving users more say in determining what content should or shouldn’t be restricted, effectively enabling Meta to pull back on direct moderation.

This shift appears to be having some impact. However, the tradeoff could be a wider spread of misinformation, since a decrease in enforcement mistakes may also indicate less aggressive enforcement overall.

These insights are part of a broader set of moderation performance data released by Meta. The full report covers a range of topics including content removals, government information requests, and the most widely viewed content across its platforms.

When it comes to Community Notes specifically, Meta has expanded the feature. Users can now write notes on Reels and replies within Threads. There’s also a new option to request a community note, further broadening user participation in the moderation process.

The concept of Community Notes has merit, particularly in democratizing content governance. It empowers users to influence what is and isn’t acceptable within the platform ecosystem. However, the effectiveness of this approach hinges on execution — especially visibility.

For example, studies have shown that a Community Note can significantly reduce the spread of misinformation when it's displayed on a post. But that impact is only felt if the note is actually shown. A critical issue observed on X is that around 85% of Community Notes are never displayed to users. This is largely because a note is only shown once contributors from opposing political perspectives agree on it, a consensus that's difficult to reach on polarizing topics.

If notes aren't shown, they can't limit the reach of misleading content. And a misleading post that is neither removed nor noted never triggers an enforcement action, so it never registers as an enforcement mistake either. So while Meta's data on reduced enforcement errors may seem positive on the surface, it could also mask underlying issues of reduced visibility and inaction.

Put simply, a 50% drop in enforcement mistakes may not be the purely positive indicator that Meta suggests.

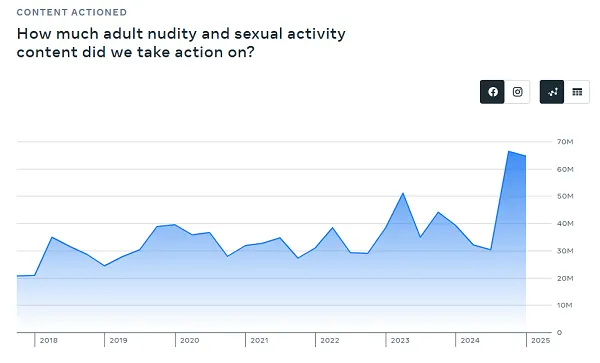

In addition, Meta's reports show a noticeable recent increase in cases involving nudity and sexual content on Facebook, pointing to other evolving challenges in its moderation efforts.

Content removed under Meta's “dangerous organizations” policy has also increased on Instagram (Meta says this was due to a bug, which is being addressed):

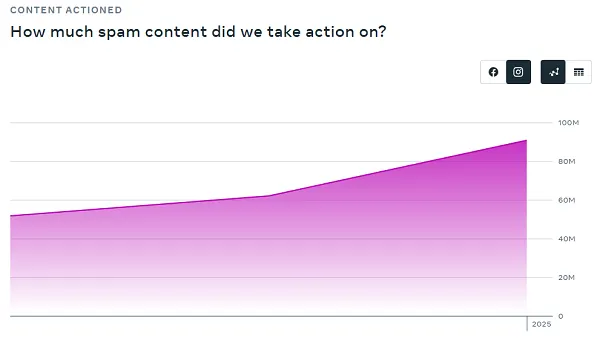

More spam is also being removed on Instagram.

And, concerningly, actions taken on suicide, self-injury and eating disorder content have also increased.

Some of these developments can be attributed to improvements in Meta's detection technology, which increasingly relies on large language models (LLMs).

According to Meta:

“Early tests suggest that LLMs can perform better than existing machine learning models, or enhance existing ones. Upon further testing, we are beginning to see LLMs operating beyond that of human performance for select policy areas.”

However, other trends may reflect broader concerns within these content categories.

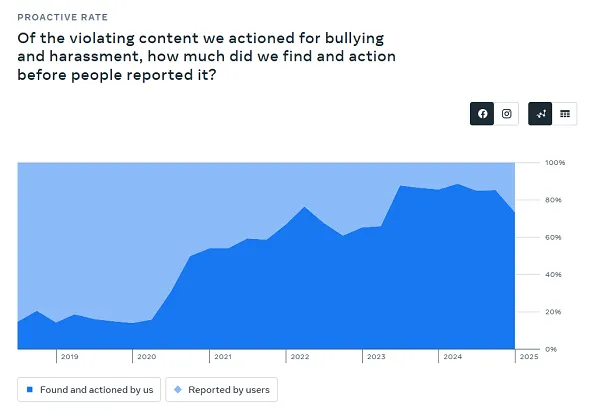

Equally troubling is a key downside of the new moderation strategy: automated detection of bullying and harassment has declined by 12%, highlighting a potential gap in enforcement as Meta shifts toward a less automated, more user-guided model.

Could this be happening because Meta is shifting more responsibility to users, effectively allowing more of this content to slip through by relying on user reports?

To be fair, Meta has acknowledged that part of the decline may stem from its decision to scale back automated detection in areas with high false positive rates. The aim is to refine and enhance the accuracy of its models. So, multiple factors could be contributing to the drop—but it does raise concerns that more problematic content might be slipping through the cracks as a result.

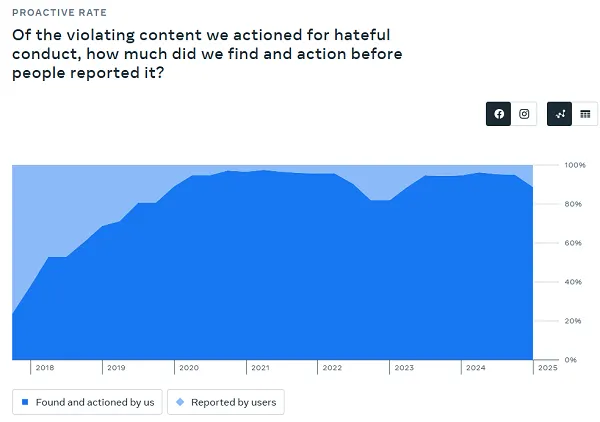

Additionally, Meta has reported a 7% decrease in the proactive detection of hateful conduct, further underscoring potential gaps in its evolving moderation approach.

In other words, these incidents may still be occurring; Meta's proactive detection simply appears to be catching fewer of them. Meta is also shifting some of the moderation burden onto Community Notes, relying on users to flag and add context to problematic posts.

Is that truly a step forward? It’s hard to say. But it clearly reflects a shift in strategy, one that may be allowing more harmful content to circulate within its apps. And considering the sheer scale of Meta’s platforms, even a small percentage drop in detection could translate into thousands—or even millions—of additional harmful incidents.

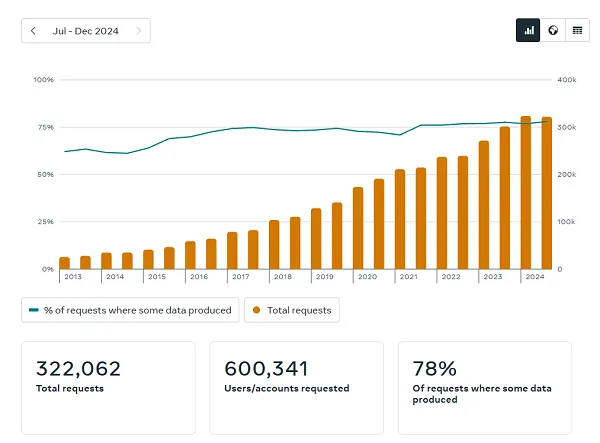

As for government information requests, Meta’s response rate has stayed relatively consistent compared to its previous report.

Meta reports that India remained the leading country for government data requests this quarter, showing a 3.8% increase. The United States, Brazil, and Germany followed closely behind.

On the fake account front, Meta estimates that such profiles now make up around 3% of Facebook's global monthly active users. That's notably lower than the 5% figure Meta has typically cited in the past, which may indicate growing confidence in its detection capabilities.

Of course, actual user experiences may vary.

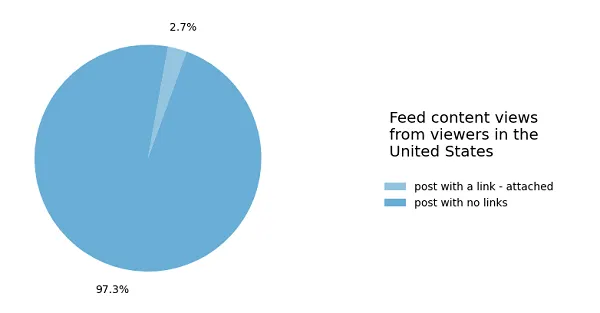

As for what people are seeing most on the platform, the data on widely viewed content still paints a bleak picture for publishers and anyone hoping to drive meaningful referral traffic from Facebook.

In Q1 2025, 97.3% of post views on Facebook in the U.S. were of posts that did not contain a link directing users outside the platform. That leaves just 2.7% of views on link posts, a slight uptick of only 0.6% compared to the previous report.

If you were hoping that Meta’s loosened stance on political content might signal a return to greater reach for publishers, the data doesn’t support that. The numbers suggest external content still isn’t making much headway in the feed.

That said, some publishers have anecdotally reported seeing more referral traffic from Facebook this year, so results may vary by outlet.

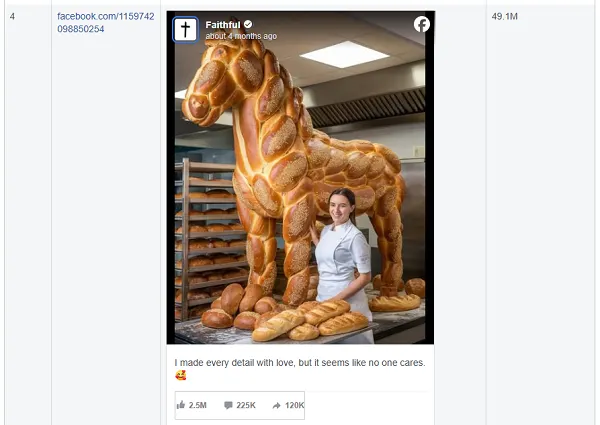

As for what’s actually getting views on the platform? Unsurprisingly, low-effort AI-generated content—often called “AI slop”—continues to dominate.

Alongside it, we're still seeing the familiar blend of tabloid-style celebrity news and viral content.

So, is Facebook actually improving under the Community Notes system?

That really depends on which metrics you prioritize. Some of the available data points to potential benefits from the shift in moderation strategy—but what’s not included in the data may be just as telling.

Overall, there have been some troubling trends in how Meta responds to certain types of content. These shifts could imply that more harmful material is slipping through the cracks.

Still, we can’t know for sure. These reports only show the actions that were taken, not the ones that weren’t. And without a broader context, it’s impossible to say definitively whether this new approach is yielding a net positive outcome.

📢 If you're interested in Facebook-related solutions, don't hesitate to connect with us!

🔹 https://linktr.ee/Adshinepro

💬 We're always ready to assist you!