Artificial Intelligence Takes the Lead in Meta’s UX Decisions

Adshine.pro | 06/02/2025

As Meta CEO Mark Zuckerberg recently noted in a company update, AI is playing an increasingly central role across Meta’s operations, from automating code to refining ad targeting, evaluating platform risks, and beyond.

This role is about to expand significantly. According to a new report from NPR, Meta is preparing to use AI for up to 90% of all risk assessments across its core apps, including Facebook, Instagram and WhatsApp. That covers risk evaluations for both new product features and policy updates.

NPR explains:

“For years, when Meta launched new features for Instagram, WhatsApp and Facebook, teams of reviewers evaluated possible risks: Could it violate users’ privacy? Could it cause harm to minors? Could it worsen the spread of misleading or toxic content? Until recently, what are known inside Meta as privacy and integrity reviews were conducted almost entirely by human evaluators, but now, according to internal company documents obtained by NPR, up to 90% of all risk assessments will soon be automated.”

This shift raises concerns because it moves critical safety and integrity responsibilities from human reviewers to AI systems, placing significant trust in automation to prevent misuse and harm.

But Meta appears confident in its systems’ capabilities. The company highlighted progress in this area in its recently released Q1 Transparency Report, showcasing improvements in AI moderation.

Earlier this year, Meta also introduced a revised enforcement strategy, especially around “less severe” policy violations. The goal: to reduce false positives and prevent over-enforcement by the system.

As part of this approach, Meta says it now disables certain automated systems when they’re found to be generating too many mistakes. The company also confirmed it’s:

“…getting rid of most [content] demotions and requiring greater confidence that the content violates for the rest. And we’re going to tune our systems to require a much higher degree of confidence before a piece of content is taken down.”

In simple terms, Meta is dialing back the aggressiveness of its moderation systems to avoid taking down content without solid evidence that it breaks the rules.

Reportedly, this has cut moderation errors by 50%, which looks like a win for accuracy. But it also opens the door to more borderline or harmful content staying online, because flagged content must now clear a higher threshold before Meta takes action.
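To make that trade-off concrete, here is a minimal Python sketch of how a confidence threshold behaves in a generic classifier-based moderation system. It is an illustration only, not Meta’s actual system: the scores and labels in `SAMPLES` and the `enforcement_outcomes` helper are hypothetical, invented for this example.

```python
# Hypothetical (score, actually_violates) pairs from an imagined classifier.
# Neither the scores nor the labels come from Meta; they are illustrative only.
SAMPLES = [
    (0.97, True), (0.91, True), (0.88, False), (0.84, True),
    (0.76, False), (0.72, True), (0.65, False), (0.58, True),
    (0.41, False), (0.30, False),
]


def enforcement_outcomes(samples, threshold):
    """Return (wrongful takedowns, violations left up) at a given threshold.

    Content is removed when its score is at or above the threshold.
    """
    # Removed, but did not actually break the rules (false positives).
    false_positives = sum(1 for score, bad in samples if score >= threshold and not bad)
    # Broke the rules, but scored below the threshold and stayed up (false negatives).
    missed = sum(1 for score, bad in samples if score < threshold and bad)
    return false_positives, missed


for threshold in (0.6, 0.9):
    fp, fn = enforcement_outcomes(SAMPLES, threshold)
    print(f"threshold={threshold}: wrongful takedowns={fp}, violations left up={fn}")
```

With these made-up numbers, raising the threshold from 0.6 to 0.9 cuts wrongful takedowns from 3 to 0, while the number of genuine violations left online rises from 1 to 3. Fewer mistakes in one direction means more misses in the other.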

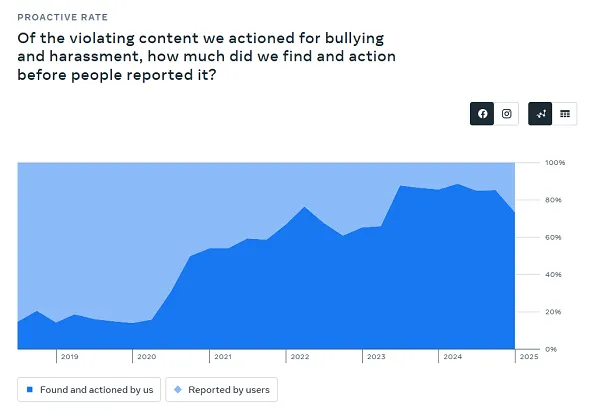

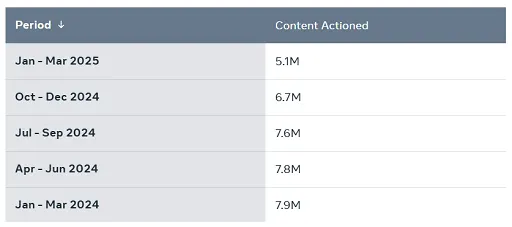

That trade-off is also visible in Meta’s latest enforcement figures.

As this chart shows, Meta’s automated detection of bullying and harassment on Facebook fell by 12% in Q1. The decline directly reflects the company’s updated moderation strategy, and it means more of this content is slipping through the cracks.

At first glance, a 12% drop might not seem dramatic. At Facebook’s scale, though, it translates into millions of posts that previously would have been flagged and removed but now remain visible: potentially harmful comments served to users as an unavoidable consequence of Meta’s shift in enforcement priorities.

The potential implications here are considerable, as Meta continues to shift more responsibility to AI systems for interpreting and enforcing its policies. The aim is to enhance efficiency and scale its moderation capabilities more effectively.

Will this approach succeed? It’s still too early to say. And it is only one part of Meta’s broader strategy to embed AI across its operations, particularly in how it evaluates and applies the rules meant to safeguard its billions of users.

Mark Zuckerberg has already indicated that, within the next 12 to 18 months, the majority of Meta’s evolving codebase will be generated by AI. That’s a more straightforward application of machine learning: analyzing large volumes of code and generating solutions from logic and learned patterns.

However, applying AI to policy enforcement—decisions that directly shape the user experience—introduces more complexity and risk. These are sensitive areas where nuance, context, and ethical considerations play a critical role, and machines may not always get it right.

Responding to NPR’s report, Meta emphasized that human oversight will remain part of the product risk review process and that only “low-risk decisions” are currently being automated. Still, it offers a glimpse of a future in which AI is increasingly responsible for decisions that affect real people in real time.

Is this the right direction for platforms at this scale?

It could be—eventually. But given the potential for far-reaching consequences, there’s still a significant level of risk involved if AI systems misinterpret or mishandle key decisions. For now, the balance between automation and human judgment remains a delicate one.

📢 If you're interested in Facebook-related solutions, don't hesitate to connect with us!

🔹 https://linktr.ee/Adshinepro

💬 We're always ready to assist you!