Meta Deletes Billions of Bogus Profiles

Adshine.pro, 12/12/2025

Meta is now touting its transition toward a Community Notes-style moderation system as a win, according to the latest update in its Community Standards Enforcement Report. The company claims the new approach is helping it strike a better balance between moderation and user autonomy across its platforms.

If you’ve been following the story, you’ll remember that back in January, Meta announced it would abandon its long-standing third-party fact-checking framework and instead embrace a user-driven model inspired by X’s Community Notes. Mark Zuckerberg framed this as a necessary shift after years of what he considered excessive censorship. With the new model, Meta wants users themselves to have greater influence over what stays up or gets flagged, rather than relying heavily on centralized enforcement.

That this approach happens to mirror what U.S. President Donald Trump has publicly advocated for is, Meta insists, purely coincidental.

So how exactly is Meta deciding that this new direction is “working”?

Here’s what Meta highlights:

“Of the hundreds of billions of pieces of content produced on Facebook and Instagram in Q3 globally, less than 1% was removed for violating our policies and less than 0.1% was removed incorrectly. For the content that was removed, we measured our enforcement precision – that is, the percentage of correct removals out of all removals – to be more than 90% on Facebook and more than 87% on Instagram.”

Meta’s argument is that fewer incorrect takedowns indicate progress. Fewer mistaken removals, fewer upset users.

But there’s an obvious catch: if you’re removing less content overall, you will naturally make fewer incorrect removals too, whether or not your accuracy has actually improved.
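A quick back-of-the-envelope sketch makes the point concrete. The removal volumes below are hypothetical, not Meta's figures; the only thing taken from the report is its definition of precision as correct removals divided by all removals. Holding precision fixed at 90%, halving the number of removals halves the number of mistaken takedowns, with no gain in accuracy at all.

```python
# Hypothetical volumes for illustration only; these are NOT Meta's figures.

def incorrect_removals(total_removals: int, precision: float) -> int:
    """Precision = correct removals / all removals, so the remainder were mistakes."""
    return round(total_removals * (1 - precision))

before = incorrect_removals(10_000_000, 0.90)  # larger enforcement volume
after = incorrect_removals(5_000_000, 0.90)    # half the removals, same precision

print(f"Mistaken removals before: {before:,}")
print(f"Mistaken removals after:  {after:,}")
```

Both scenarios share exactly the same precision, so the drop in mistakes comes entirely from removing less.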

And the data reflects that shift clearly. Take Meta’s own reporting on “Bullying and Harassment.” The rate at which Meta detects and removes violative content before users report it has plunged over the past three quarters.

A 20% drop in proactive enforcement means users are now encountering more problematic content before Meta steps in.

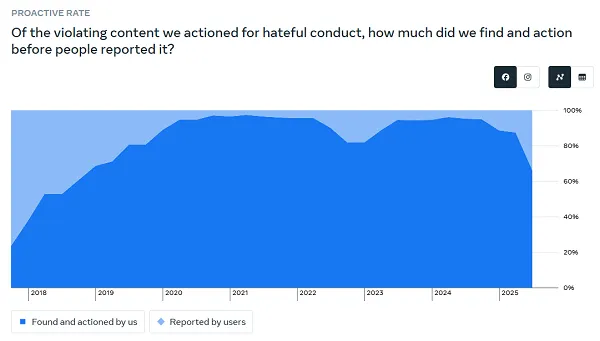

You see the same story unfold in Meta’s metrics on “Hateful Conduct”:

That sharp decline at the end isn’t subtle. It signals a tangible weakening of the systems responsible for flagging and removing hate-related content — historically one of the most sensitive categories in online discourse.

In other words, while Meta may be proudly pointing to “fewer mistakes,” the trade-off is unmistakable: its platforms are allowing more potentially harmful posts to circulate. And instead of presenting this as a compromise, Meta is framing it as an indicator of success — a win for “free expression.”

Yet when enforcement precision is the primary metric, it becomes nearly impossible to evaluate whether the Community Notes experiment is actually effective, or whether Meta is simply celebrating the consequences of fewer interventions.

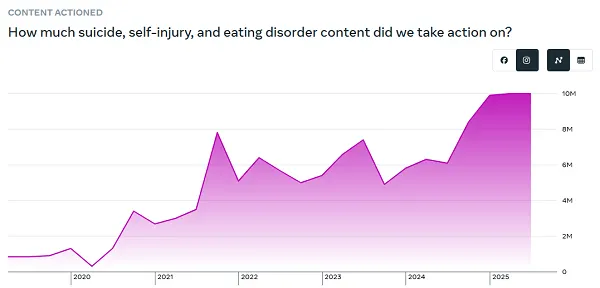

Looking closer at specific policy areas, Meta notes:

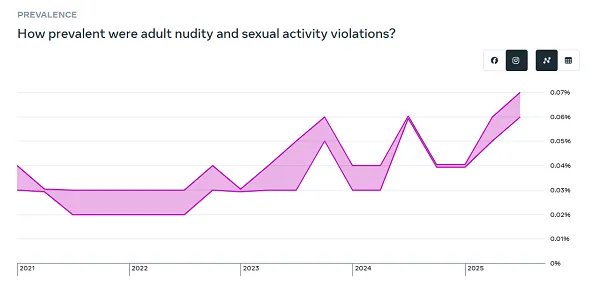

“On both Facebook and Instagram, prevalence increased for adult nudity and sexual activity, and for violent and graphic content, and on Facebook it increased for bullying and harassment. This is largely due to changes made during the quarter to improve reviewer training and enhance review workflows, which impacts how samples are labeled when measuring prevalence.”

Because Meta attributes these spikes to methodological changes, it’s unclear whether these are genuine increases in harmful content or simply the result of updated labeling processes.

Still, with global debates intensifying over youth exposure to social media, some of these upward trends raise questions — regardless of methodology.

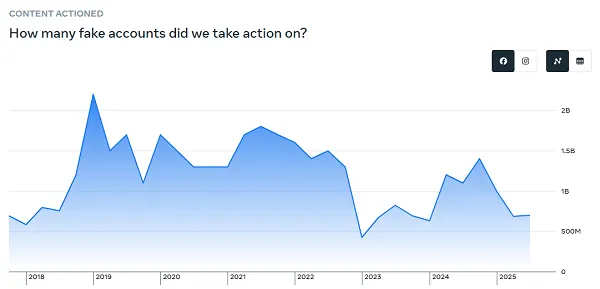

When it comes to fake accounts, Meta continues to insist that only about 4% of its 3-billion-plus monthly active users fall into that category.

Yet many users would argue that they encounter fake profiles far more frequently. And with the growing presence of AI-generated personas — some of which Meta itself is building — the distinction between “fake,” “AI-generated,” and “malicious” is blurrier than ever.

Even at 4% of more than 3 billion users, though, we're still looking at upwards of 120 million inauthentic accounts, a staggering number that Meta openly acknowledges.

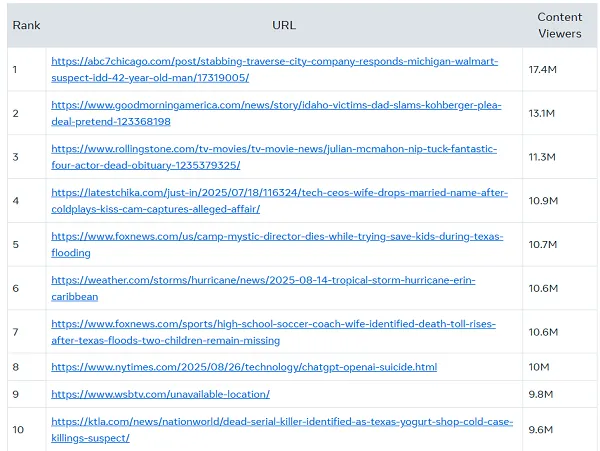

Meta has also refreshed its “Widely Viewed Content Report,” an initiative launched in 2021 to counter claims that Facebook disproportionately boosts divisive or misleading posts. By showing which posts actually reach the largest audiences in the U.S., Meta is trying to reshape the narrative around its influence.

The findings? The top-reaching posts are overwhelmingly centered on trending real-world events.

Crime stories, actor Julian McMahon’s passing, and the viral Coldplay concert moment — these dominated U.S. feeds in Q3.

Not political warfare. Not partisan misinformation. Just highly engaging, curiosity-driven stories. While that’s a mild improvement over the usual tabloid-heavy lists, the key message Meta wants to convey is simple: political content isn't dominating Facebook.

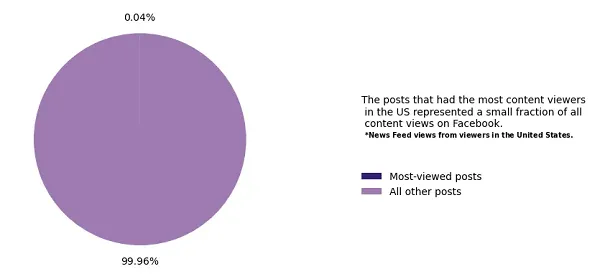

But a note of caution: the most-viewed posts represent only a sliver of overall activity.

At Meta’s scale, even a small slice of visibility can equate to millions of impressions. So while Meta is aiming for transparency, the data still gives only a partial picture of the platform’s true influence.

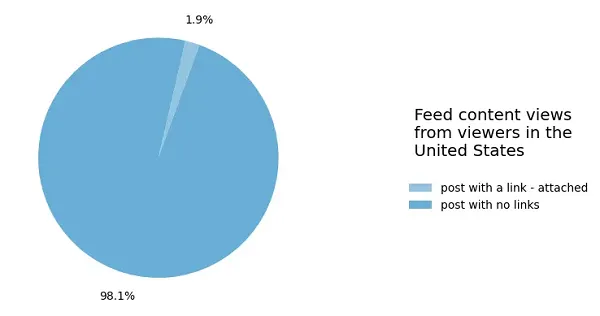

And then there’s this chart — the one that makes digital marketers collectively groan:

Link posts now make up a microscopic portion of what users see. If your goal is to push traffic through Facebook, expect disappointment.

The decline is steep: from 9.8% in 2022, to 2.7% earlier this year, and now even lower.
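To put that slide in relative terms, here is a small sketch using only the two figures cited above (9.8% in 2022 and 2.7% earlier this year). It separates the absolute drop in percentage points from the relative decline, which is the number that matters if you depend on Facebook referral traffic.

```python
# Share of what users see that comes from link posts, per the figures cited above.

def decline(old_pct: float, new_pct: float) -> tuple[float, float]:
    """Return (percentage-point drop, relative drop in %), rounded to one decimal."""
    points = old_pct - new_pct
    relative = points / old_pct * 100
    return round(points, 1), round(relative, 1)

points, relative = decline(9.8, 2.7)
print(f"{points} percentage points, about {relative}% lower in relative terms")
```

In other words, even before the latest drop, link posts had already lost roughly seven-tenths of their 2022 share.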

Taken together, Meta’s latest transparency updates present a mixed narrative. Yes, the company is making fewer enforcement errors. But the reduced moderation also appears to be opening the door to more questionable content — even if Meta is currently positioning that shift as progress.

In the end, whether the Community Notes model is truly succeeding depends on what you think matters more: fewer mistakes, or fewer harmful posts slipping through.